We've all watched artificial intelligence tools move from curiosities to everyday essentials. Whether they're answering questions, drafting content, or summarizing documents, speed plays a huge role. That's where Claude 3 Haiku stands out: not for being the biggest model, but for being the fastest. It's the smallest model in Anthropic's Claude 3 family, yet it handles tasks with surprising agility. If you need results without the lag, this one's built for you. Let's break it down.

Claude 3 Haiku isn't just "fast for an AI model"; it's fast, period. That speed comes from how the model is designed and optimized under the hood. Anthropic positions Haiku not as a stripped-down afterthought but as a model built around specific goals: fast outputs, low latency, and the ability to stay responsive even under a heavy stream of requests.

Token processing speed is where it really wins. According to Anthropic, Claude 3 Haiku can read up to 21,000 tokens (roughly 30 pages) per second for prompts under 32,000 tokens. That's not a typo. Anthropic describes this as about three times faster than its peers for most workloads. It can work through large documents without breaking your flow, so you don't sit around waiting for it to catch up.
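To put that throughput figure in perspective, here is a back-of-the-envelope estimate of how long Haiku would spend reading a prompt at the quoted rate. It is a minimal sketch that assumes the 21,000 tokens/second figure holds flat, which Anthropic only quoted for prompts under 32K tokens; the function name is hypothetical, and real latency also includes network time and output generation.

```python
def estimate_read_seconds(prompt_tokens: int, tokens_per_second: float = 21_000) -> float:
    """Idealized time for the model to read a prompt at a constant throughput.

    Assumes the quoted ~21K tokens/s rate applies uniformly. Ignores network
    overhead and the time spent generating output tokens.
    """
    if prompt_tokens < 0 or tokens_per_second <= 0:
        raise ValueError("need prompt_tokens >= 0 and tokens_per_second > 0")
    return prompt_tokens / tokens_per_second


if __name__ == "__main__":
    # Hypothetical prompt sizes, all under the 32K-token range the figure was quoted for.
    for tokens in (5_000, 10_500, 21_000):
        print(f"{tokens:>6} tokens -> {estimate_read_seconds(tokens):.2f} s")
```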

Efficiency is another part of the story. As the smallest model in the family, Haiku needs less computation to work through an input than its larger siblings, which keeps latency low and makes it more dependable for time-sensitive tasks. Whether it's running a customer support chatbot or a coding assistant, that reduced lag means a smoother experience for the user.

One of the best things about Haiku is that it shares the same 200,000-token context window as its larger siblings, Sonnet and Opus. That's massive. It means you can feed it entire books, long email threads, or piles of internal documents in a single request. This makes it especially appealing to businesses and researchers who work with long texts regularly.
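Before sending a very long document, it can help to check whether it is likely to fit in that window. The sketch below uses a rough heuristic of about 4 characters per token for English text; that ratio is an assumption, not Claude's actual tokenizer, so treat the result as an estimate. It also reserves headroom for the reply (4,096 tokens, Haiku's maximum output length). The helper names are hypothetical.

```python
import math

# Claude 3 Haiku's context window, shared with Sonnet and Opus.
CONTEXT_WINDOW_TOKENS = 200_000


def rough_token_count(text: str, chars_per_token: float = 4.0) -> int:
    """Estimate tokens from character count.

    The ~4 characters/token ratio is a common rule of thumb for English,
    not Claude's actual tokenizer.
    """
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, reserved_for_output: int = 4_096,
                    window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Return True if the estimated prompt plus output headroom fits the window."""
    return rough_token_count(text) + reserved_for_output <= window


if __name__ == "__main__":
    long_report = "word " * 100_000   # 500,000 characters, ~125K estimated tokens
    oversized_dump = "x" * 800_000    # 800,000 characters, ~200K estimated tokens
    print(fits_in_context(long_report))
    print(fits_in_context(oversized_dump))
```

For anything close to the limit, an exact count from the provider's own tokenizer is the safer check; the heuristic is only a quick first filter.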

Speed aside, Haiku performs well on everyday tasks, too. In Anthropic's published results, it scores above GPT-3.5 on several benchmarks covering reading comprehension, math, and coding. In practice, that translates into fewer errors on the kinds of tasks people run every day.

Claude 3 Haiku also supports vision inputs. You can give it images, and it responds with analysis, summaries, or answers based on their content. Image understanding is often associated with larger models, but Haiku adds it without slowing down.

You don't need to overhaul your workflow to use Claude 3 Haiku well. It's more about knowing where it fits and how to prompt it. It works best in fast-paced settings like customer support or when summarizing long content quickly. Start by identifying tasks where speed matters more than technical depth. Use clear, natural prompts; there's no need for complex formatting. Simple requests like "Summarize this in 3 points" or "List key complaints from this review thread" usually work best.
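For developers, that prompting advice maps onto a single Messages API call. This minimal sketch builds the request for a "Summarize this in 3 points" style prompt. It assumes the official `anthropic` Python SDK and the model ID `claude-3-haiku-20240307`; the helper names `build_summary_request` and `send` are hypothetical, not part of the SDK. Sending requires an `ANTHROPIC_API_KEY` environment variable and network access, so the pure request builder is kept separate from the call.

```python
MODEL_ID = "claude-3-haiku-20240307"


def build_summary_request(document: str, points: int = 3, max_tokens: int = 300) -> dict:
    """Build keyword arguments for client.messages.create().

    The prompt follows the plain, natural style recommended for Haiku:
    a short instruction followed by the text to summarize.
    """
    prompt = f"Summarize this in {points} points:\n\n{document}"
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,  # hard cap on the reply length, in tokens
        "messages": [{"role": "user", "content": prompt}],
    }


def send(request: dict) -> str:
    """Send the request with the anthropic SDK and return the reply text."""
    import anthropic  # imported lazily so the builder works without the SDK installed

    client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment
    message = client.messages.create(**request)
    return message.content[0].text


if __name__ == "__main__":
    req = build_summary_request(
        "Customer A: shipping was slow. Customer B: the app crashes on login."
    )
    print(req["messages"][0]["content"])
    # With an API key configured, send(req) would return Haiku's summary.
```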

Haiku accepts large inputs, but you can still shape the output by setting limits: "Keep it under 150 words" helps when you need a tight response. If images are involved, like scanned docs or charts, just include them with your question. Haiku connects visuals to context well, making it useful for tasks like invoice checks or basic visual analysis. For highly technical subjects, a human review is still smart. But for everyday tasks, Haiku handles things smoothly on its own.
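Both tips can be combined in one request: an image attached as a base64 content block, followed by a question that states a word limit. This is a minimal sketch assuming the image format of Anthropic's Messages API (`{"type": "image", "source": {"type": "base64", ...}}`) and the model ID `claude-3-haiku-20240307`; the function name and the `invoice.png` path are hypothetical placeholders.

```python
import base64
from pathlib import Path

MODEL_ID = "claude-3-haiku-20240307"


def build_vision_request(image_bytes: bytes, question: str,
                         media_type: str = "image/png",
                         word_limit: int = 150) -> dict:
    """Build Messages API kwargs pairing one image with a question.

    The word limit is a soft instruction inside the prompt; max_tokens is
    the hard cap the API enforces on the reply.
    """
    image_block = {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": media_type,
            "data": base64.b64encode(image_bytes).decode("ascii"),
        },
    }
    text_block = {"type": "text", "text": f"{question} Keep it under {word_limit} words."}
    return {
        "model": MODEL_ID,
        "max_tokens": 400,  # headroom above ~150 words of output
        # Image first, then the question about it.
        "messages": [{"role": "user", "content": [image_block, text_block]}],
    }


if __name__ == "__main__":
    path = Path("invoice.png")  # placeholder; point this at your own scanned document
    if path.exists():
        req = build_vision_request(path.read_bytes(), "Check this invoice for missing fields.")
        print(req["messages"][0]["content"][1]["text"])
```

The resulting dictionary can be passed as keyword arguments to `client.messages.create()` in the `anthropic` SDK.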

Claude 3 Haiku hasn't made waves on speed alone; it's also been tested, benchmarked, and analyzed by people who care about the details. If you're the type who prefers numbers over anecdotes, this section is for you.

In head-to-head evaluations, Haiku performs well on many tasks usually reserved for larger models. On MMLU (Massive Multitask Language Understanding), a benchmark covering subjects from law to math, Haiku outscores GPT-3.5 in Anthropic's published results. It's not just answering quickly; it's getting the right answers more often.

Despite being the smallest model in the Claude 3 lineup, Haiku shows surprisingly solid results on basic and intermediate math problems. It also holds up in code-related tasks, especially ones that rely more on pattern recognition than on deep architectural reasoning. So while it's not a substitute for heavier tools on complex engineering work, it handles everyday developer needs like summarizing code, rewriting functions, or finding small logic issues.

When tested with image-based tasks, Claude 3 Haiku shows decent competence. It’s able to read charts, identify patterns in screenshots, and explain visual layouts. This performance adds a layer of usefulness for teams working with visual data, especially in cases where speed is more critical than nuance.

Latency is a dealbreaker for anyone building applications or plugins around AI. Haiku is designed for high-throughput, low-latency interactions, making it a solid fit for apps that need near-instant responses. Whether you're integrating it into a web app or building automation, its speed keeps the experience smooth even when requests pile up.
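For apps that fire many requests at once, a common pattern is to cap how many calls are in flight so bursts don't run into rate limits. The sketch below shows that pattern with `asyncio.Semaphore`. `fake_haiku_call` is a hypothetical stand-in for a real model call that simply sleeps, so the example runs offline; the cap of 5 is an arbitrary illustration, not a recommended value.

```python
import asyncio


async def fake_haiku_call(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; sleeps to simulate latency."""
    await asyncio.sleep(0.01)
    return f"reply to: {prompt}"


async def run_bounded(prompts: list[str], max_in_flight: int = 5) -> tuple[list[str], int]:
    """Run prompts concurrently with at most max_in_flight calls active.

    Returns the replies (in the same order as prompts) and the peak number
    of calls that were in flight at the same time.
    """
    semaphore = asyncio.Semaphore(max_in_flight)
    in_flight = 0
    peak = 0

    async def worker(prompt: str) -> str:
        nonlocal in_flight, peak
        async with semaphore:  # waits here once max_in_flight calls are active
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fake_haiku_call(prompt)
            finally:
                in_flight -= 1

    # gather preserves input order in its results
    replies = await asyncio.gather(*(worker(p) for p in prompts))
    return list(replies), peak


if __name__ == "__main__":
    replies, peak = asyncio.run(run_bounded([f"ticket {i}" for i in range(20)]))
    print(len(replies), "replies; peak concurrency:", peak)
```

In a real integration, `fake_haiku_call` would be replaced by an awaited `messages.create()` call on the `anthropic` SDK's async client (`anthropic.AsyncAnthropic`). The semaphore lets independent requests overlap, which is where a fast model pays off, while still keeping bursts bounded.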

Claude 3 Haiku isn't trying to compete with the biggest models on raw power. It's built for speed, stability, and practical performance. If you've ever needed answers fast but didn't want to sacrifice quality, this model strikes that balance. It's light but not lightweight; small but not stripped. And for the tasks people actually use AI for every day, it delivers. So if you've been waiting for something that doesn't make you wait, Claude 3 Haiku is already here. Stay tuned for more!

Advertisement

Spending hours in VS Code? Explore six of the most useful ChatGPT-powered extensions that can help you debug, learn, write cleaner code, and save time—without breaking your flow.

Learn how to create professional videos with InVideo by following this easy step-by-step guide. From writing scripts to selecting footage and final edits, discover how InVideo can simplify your video production process

Discover how Snowflake empowers EdTech vendors with real-time data, AI tools, and secure cloud solutions for smarter learning

Thinking of running an AI model on your own machine? Here are 9 pros and cons of using a local LLM, from privacy benefits to performance trade-offs and setup challenges

Ever wondered if your chatbot is keeping secrets—or spilling them? Learn how model inversion attacks exploit AI models to reveal sensitive data, and what you can do to prevent it

Looking for an AI that delivers fast results? Claude 3 Haiku is designed to provide high-speed, low-latency responses while handling long inputs and even visual data. Learn how it works

AWS SageMaker suite revolutionizes data analytics and AI workflows with integrated tools for scalable ML and real-time insights

Multimodal artificial intelligence is transforming technology and allowing smarter machines to process sound, images, and text

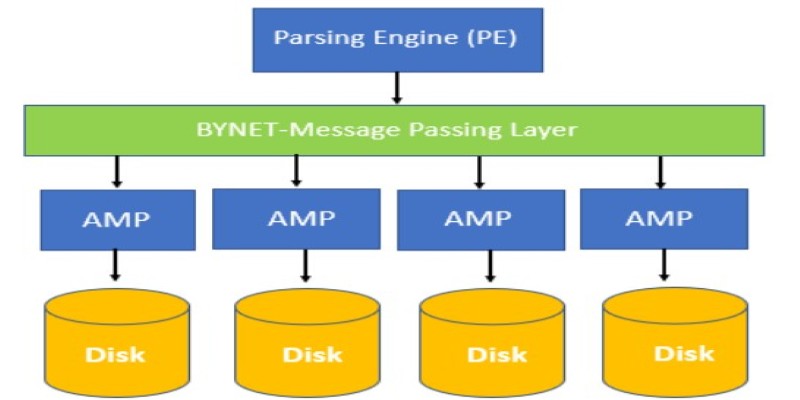

Find out the concepts of Teradata, including its architecture, key features, and real-world uses. Learn why Teradata remains a reliable choice for large-scale data management and analytics

How can AI make your life easier in 2025? Explore 10 apps that simplify tasks, improve mental health, and help you stay organized with AI-powered solutions

What makes Google Maps so intuitive in 2025? Discover how AI features like crowd predictions and eco-friendly routing are making navigation smarter and more personalized.

Heard about on-device AI but not sure what it means? Learn how this quiet shift is making your tech faster, smarter, and more private—without needing the cloud