AI isn’t some distant, futuristic idea anymore—it’s in your pocket, on your wrist, and even woven into the apps you use every day. You might already be using it without knowing it. But here’s something you might not have heard much about: on-device AI. It works differently from the cloud-based systems people are more familiar with. Instead of sending your data somewhere else to be processed, it keeps everything on your device.

This shift may seem small, but it changes a lot. It means quicker actions, less waiting, and better privacy. But those are just the surface benefits. On-device AI is quietly reshaping how devices think, respond, and help us out, all while staying local.

To understand why on-device AI matters, it helps to look at what came before it. Most AI systems have relied on cloud computing. Say you ask your phone a question or use an app to translate a sentence: your device sends that request to a distant server, waits for it to process the data, and then receives the result. It works, but it's not instant. And if your internet signal cuts out? You're stuck.

With on-device AI, none of that back-and-forth happens. Everything is processed right on your phone, tablet, or smartwatch. It doesn’t need to check with a distant server—it already has what it needs. This makes tasks faster and smoother, and it allows features to work without an internet connection.

There’s also a shift in how personal data is treated. Since the data being processed doesn’t have to leave the device, the information stays with you. That alone is a meaningful improvement for anyone concerned about how much of their digital life ends up stored on external servers. On-device AI helps reduce that risk.

For AI to work directly on your device, the device has to be built for it. That’s where specialized hardware comes into play. Newer smartphones, laptops, and even headphones come with chips built to handle AI tasks. These aren’t general-purpose processors—they’re designed to support things like image recognition, voice commands, and smart predictions without overheating or draining the battery.

These chips go by different names depending on the brand: neural engines, AI cores, NPUs (neural processing units), and more. But whatever they’re called, the idea is the same. They make it possible for devices to do things that used to require cloud support.

One area where these chips shine is machine learning. Once an AI model is trained (usually in the cloud), the model is loaded onto your device. From there, the chip runs it efficiently—so your phone can recognize a song playing nearby or improve a blurry photo without sending anything out.
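The train-elsewhere, run-locally flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `MODEL_JSON` string stands in for a model file shipped to the device, its weights are made up, and the functions `load_model` and `predict` are hypothetical names. A real phone would run a far larger model on its neural chip, but the shape of the flow is the same: load once, then predict with no network call.

```python
import json
import math

# Hypothetical model file contents. In practice this would arrive with an
# app or OS update after training elsewhere; the numbers here are made up.
MODEL_JSON = '{"weights": [1.5, -2.0], "bias": 0.25}'


def load_model(serialized):
    """Parse the shipped model into plain Python data (no network involved)."""
    model = json.loads(serialized)
    return model["weights"], model["bias"]


def predict(weights, bias, features):
    """Run a small logistic-regression prediction entirely in local memory."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-score))


weights, bias = load_model(MODEL_JSON)
probability = predict(weights, bias, [1.0, 0.5])
print(f"local prediction: {probability:.3f}")
```

Nothing in this sketch opens a connection: the input features and the result both stay in the device's memory, which is the property the rest of the article relies on.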

This isn’t just about power—it’s about practicality. Devices that can think for themselves are quicker, more responsive, and less reliant on constant network access. And because the work is done locally, it frees up network bandwidth and helps your apps run without lag.

Even if you’ve never heard the term “on-device AI,” chances are it’s already working behind the scenes in the tools you use daily. Here are just a few ways it’s been quietly integrated into real life:

Voice assistants: Wake word detection like “Hey Siri” or “Hey Google” is often handled right on the device now. That’s why your phone can notice the wake word right away, even if you're offline. What happens after that may still depend on the cloud, depending on the request.

Photography and video: Cameras can now adjust lighting, detect faces, and apply effects in real-time, thanks to AI built into the phone. You’re not waiting for cloud processing—everything happens the moment you tap the shutter.

Typing suggestions: Autocorrect and predictive text features are now smarter because they learn from how you type. The model lives on your phone, so it adapts over time privately.

Health tracking: Smartwatches use local AI to monitor things like heart rhythms, step patterns, and sleep stages. They can even flag irregularities—all without needing to ping a server.

Live translation: Some language apps offer camera-based translation that works without data. You point your phone at a sign in another language, and it shows the translated version almost instantly, with no internet connection needed.

These examples show how the shift to local AI makes daily tasks feel more natural. You don’t have to think about it working—it just does.
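To make the typing-suggestions example a little more concrete, here is a toy sketch of a predictor that adapts to what you type while keeping everything in local memory. It is an assumption-heavy simplification: real keyboards use much more capable models, and the `LocalSuggester` class below is a hypothetical name that just counts which word tends to follow which.

```python
from collections import Counter, defaultdict


class LocalSuggester:
    """Toy next-word predictor whose learned state never leaves this object."""

    def __init__(self):
        # Maps each word to a count of the words that have followed it.
        self.next_words = defaultdict(Counter)

    def learn(self, sentence):
        """Update the counts from one typed sentence."""
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            self.next_words[current][following] += 1

    def suggest(self, word, k=3):
        """Return up to k of the most frequent followers of `word`."""
        followers = self.next_words.get(word.lower(), Counter())
        return [candidate for candidate, _ in followers.most_common(k)]


suggester = LocalSuggester()
suggester.learn("see you at the gym")
suggester.learn("see you at the office")
suggester.learn("meet me at the gym")
print(suggester.suggest("the"))  # ['gym', 'office']
```

The more sentences the suggester sees, the more its guesses reflect one person's habits, and none of that history has to be uploaded for it to work.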

As more companies focus on privacy, speed, and independence, on-device AI is becoming more than just a feature—it’s a design decision. Building smart features into the device itself creates a new kind of user experience: one that’s responsive, private, and less dependent on outside infrastructure.

There’s also the matter of scale. If millions of users are relying on cloud servers for every interaction, that’s a massive load on data centers and networks. Local AI takes some of that pressure off. Devices do more of the work themselves, which means less data traffic, lower server costs, and reduced energy use in the cloud.

For users, this leads to tech that feels smarter without being intrusive. The phone learns your habits, the keyboard guesses what you're trying to say, and the camera knows what to focus on. And it all happens quietly, without demanding attention or sending your data anywhere.

What’s more, as hardware gets more capable, the kinds of things AI can do on-device will only grow. In the near future, we might see phones that edit video intelligently, laptops that summarize documents without needing an app, and earbuds that translate in real-time during conversations—all without needing to connect to anything outside the device.

On-device AI may not be as flashy as some other tech headlines, but it’s making a real difference in how technology fits into everyday life. It’s the kind of shift that users don’t always notice—but they feel it. Things are faster. Smarter. More personal. And more private.

What started as a technical improvement is now becoming the standard. Devices that can think for themselves offer a smoother, more reliable experience. No waiting, no uploading—just tech that works when you need it. And that’s exactly what AI was supposed to be from the beginning.

Advertisement

Not sure how Natural Language Processing and Machine Learning differ? Learn what each one does, how they work together, and why it matters when building or using AI tools.

Multimodal artificial intelligence is transforming technology and allowing smarter machines to process sound, images, and text

What makes Google Maps so intuitive in 2025? Discover how AI features like crowd predictions and eco-friendly routing are making navigation smarter and more personalized.

Discover how GenAI transforms supply chain management with smarter forecasting, inventory control, logistics, and risk insights

Explore the top 12 free Python eBooks that can help you learn Python programming effectively in 2025. These books cover everything from beginner concepts to advanced techniques

Not all AI works the same. Learn the difference between public, private, and personal AI—how they handle data, who controls them, and where each one fits into everyday life or work

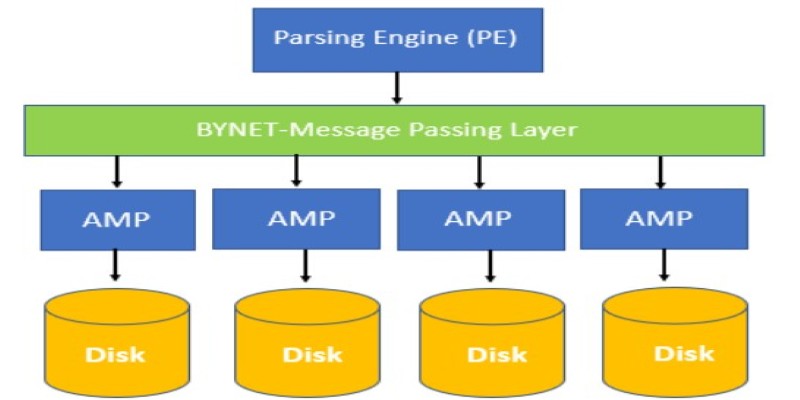

Find out the concepts of Teradata, including its architecture, key features, and real-world uses. Learn why Teradata remains a reliable choice for large-scale data management and analytics

Heard about on-device AI but not sure what it means? Learn how this quiet shift is making your tech faster, smarter, and more private—without needing the cloud

Spending hours in VS Code? Explore six of the most useful ChatGPT-powered extensions that can help you debug, learn, write cleaner code, and save time—without breaking your flow.

Learn how to create professional videos with InVideo by following this easy step-by-step guide. From writing scripts to selecting footage and final edits, discover how InVideo can simplify your video production process

What if an AI could read, plan, write, test, and submit code fixes for GitHub issues? Learn about SWE-Agent, the open-source tool that automates the entire process of code repair

Wondering how to turn a single image into a 3D model? Discover how TripoSR simplifies 3D object creation with AI, turning 2D photos into interactive 3D meshes in seconds